The first project undertaken at HAL (Human Augmentation Lab) was the development of a rudimentary brain-computer interface (BCI) using consumer-grade technologies.

We used the Muse EEG headset to detect whether a subject was relaxing with her eyes closed or focusing with her eyes open. These states were mapped to the navigation of a Neato mobile robot, so that the robot moved forward while the user's eyes were closed and otherwise remained stationary.

Project Details: The project can best be described in four steps.

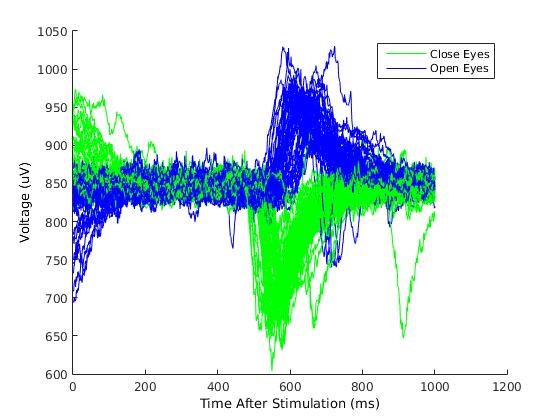

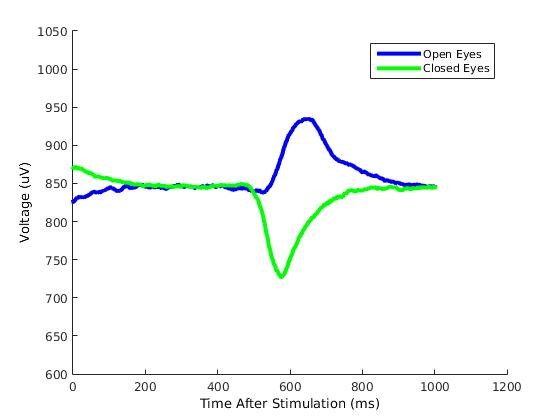

First, data was collected for training. Over a few minutes, a graphical interface repeatedly cued the user to either close or open her eyes. As she followed the instructions, a Muse electroencephalography (EEG) headset non-invasively recorded her brain activity from four electrodes, two on the forehead and two behind the ears. A photodiode circuit attached to the monitor detected the exact moment each stimulus appeared onscreen, so that only the EEG recorded immediately after a stimulus was used for classification.
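The photodiode step amounts to finding stimulus onsets in an auxiliary channel and cutting fixed-length EEG windows after each one. The sketch below illustrates that idea with NumPy; it is not the project's actual code (which ran in BCILAB), and the threshold, the window bounds, and the 256 Hz sampling rate used in the usage example are assumptions.

```python
import numpy as np

def find_stimulus_onsets(photodiode, threshold):
    """Return sample indices where the photodiode signal rises above threshold."""
    above = photodiode > threshold
    # A rising edge is a sample above threshold whose predecessor was not.
    return np.flatnonzero(above[1:] & ~above[:-1]) + 1

def extract_epochs(eeg, onsets, fs, tmin, tmax):
    """Cut windows from tmin to tmax seconds after each onset.

    eeg has shape (n_channels, n_samples); the result has shape
    (n_epochs, n_channels, n_times). Onsets whose window would run past
    either end of the recording are dropped.
    """
    start_off = int(round(tmin * fs))
    stop_off = int(round(tmax * fs))
    epochs = [eeg[:, o + start_off:o + stop_off]
              for o in onsets
              if o + start_off >= 0 and o + stop_off <= eeg.shape[1]]
    return np.stack(epochs)

# Usage on synthetic data: 4 channels, 10 s at an assumed 256 Hz.
fs = 256
eeg = np.random.default_rng(0).standard_normal((4, 10 * fs))
photodiode = np.zeros(10 * fs)
photodiode[2 * fs:2 * fs + 50] = 1.0  # screen flash at t = 2 s
photodiode[6 * fs:6 * fs + 50] = 1.0  # screen flash at t = 6 s
onsets = find_stimulus_onsets(photodiode, threshold=0.5)
epochs = extract_epochs(eeg, onsets, fs, tmin=0.0, tmax=2.0)
# epochs.shape == (2, 4, 512)
```

Using the photodiode rather than the software's own timestamp avoids the variable delay between issuing a draw call and the pixels actually changing on the monitor.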

Second, a training procedure was applied to the recorded data to identify the patterns that differ most between the two user states. All signal processing and analysis was performed in BCILAB, a MATLAB toolbox. After preliminary epoching and filtering, the Spectral Common Spatial Pattern (Spec-CSP) technique was used for feature extraction, and Linear Discriminant Analysis (LDA) was used to build a statistical model of the resulting feature distributions. The model was trained and evaluated by cross-validation, using mean squared error as the loss.
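BCILAB's Spec-CSP, which also learns a spectral filter, is not reproduced here. As a rough illustration of the same pipeline shape, the sketch below swaps in plain (broadband) CSP on epochs assumed to be already band-pass filtered and zero-mean, followed by log-variance features, a two-class LDA, and k-fold cross-validation scored by mean squared error on 0/1 labels (for hard labels this equals the misclassification rate). The function names and parameters (`n_pairs`, `shrink`, `k`) are illustrative and are not BCILAB's API.

```python
import numpy as np

def csp_filters(epochs, labels, n_pairs=1):
    """Fit plain CSP spatial filters for two classes labelled 0 and 1.

    epochs: (n_epochs, n_channels, n_times), assumed band-pass filtered.
    Returns 2 * n_pairs filters as rows.
    """
    def mean_cov(x):
        # Trace-normalised spatial covariance, averaged over epochs.
        return np.mean([e @ e.T / np.trace(e @ e.T) for e in x], axis=0)

    c0 = mean_cov(epochs[labels == 0])
    c1 = mean_cov(epochs[labels == 1])
    # Whiten the composite covariance so that c0 + c1 becomes the identity.
    evals, evecs = np.linalg.eigh(c0 + c1)
    p = np.diag(evals ** -0.5) @ evecs.T
    # Eigenvectors of whitened c1: smallest eigenvalues favour class 0,
    # largest favour class 1 (eigh sorts eigenvalues in ascending order).
    _, b = np.linalg.eigh(p @ c1 @ p.T)
    w = b.T @ p
    return np.vstack([w[:n_pairs], w[-n_pairs:]])

def csp_features(epochs, w):
    """Normalised log-variance of each CSP-filtered signal."""
    z = np.einsum("fc,nct->nft", w, epochs)
    var = z.var(axis=2)
    return np.log(var / var.sum(axis=1, keepdims=True))

def fit_lda(x, y, shrink=1e-3):
    """Two-class Fisher LDA with a small ridge on the pooled covariance."""
    mu0, mu1 = x[y == 0].mean(axis=0), x[y == 1].mean(axis=0)
    xc = np.vstack([x[y == 0] - mu0, x[y == 1] - mu1])
    sw = xc.T @ xc / (len(x) - 2) + shrink * np.eye(x.shape[1])
    wv = np.linalg.solve(sw, mu1 - mu0)
    return wv, -wv @ (mu0 + mu1) / 2

def predict_lda(model, x):
    wv, bias = model
    return (x @ wv + bias > 0).astype(int)

def cross_val_mse(epochs, labels, k=5, n_pairs=1, seed=0):
    """k-fold CV; CSP and LDA are refit on each training fold only."""
    idx = np.random.default_rng(seed).permutation(len(labels))
    folds = np.array_split(idx, k)
    errors = []
    for i, test in enumerate(folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        w = csp_filters(epochs[train], labels[train], n_pairs)
        model = fit_lda(csp_features(epochs[train], w), labels[train])
        pred = predict_lda(model, csp_features(epochs[test], w))
        errors.append(np.mean((pred - labels[test]) ** 2))
    return float(np.mean(errors))
```

Refitting the spatial filters inside each fold matters: fitting CSP on all epochs before splitting would leak test information into the features and make the cross-validated error look optimistic.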

Third, the trained model was applied to new data for live classification. As the user opened and closed her eyes, the pipeline predicted in real time which of the two states she was in and published each prediction to a live output stream. The system performed well at capturing the transitions between states, via event-related potentials, but poorly represented the longer-term oscillatory activity that persists while the user holds either state.
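Live classification typically means re-running the trained model on a sliding window of the most recent samples. The sketch below shows that buffering logic only; `predict` is a hypothetical hook standing in for the trained pipeline, and the variance threshold in the usage example is a toy placeholder, not the project's classifier.

```python
from collections import deque

import numpy as np

class SlidingWindowClassifier:
    """Apply a trained classifier to the latest `window` samples of a stream.

    `predict` is any callable mapping an (n_channels, window) array to a
    label; here it is a hypothetical stand-in for the trained model.
    """

    def __init__(self, window, predict):
        self.window = window
        self.predict = predict
        # Each entry is one time sample (a vector across channels);
        # the deque discards the oldest samples automatically.
        self.buffer = deque(maxlen=window)

    def push(self, chunk):
        """Append an (n_channels, n_new) chunk; return a label once full."""
        for sample in np.asarray(chunk).T:
            self.buffer.append(sample)
        if len(self.buffer) < self.window:
            return None
        return self.predict(np.array(self.buffer).T)

# Usage with a toy rule: high variance on channel 0 is labelled 1.
clf = SlidingWindowClassifier(window=256,
                              predict=lambda x: int(x[0].var() > 2.0))
rng = np.random.default_rng(0)
first = clf.push(rng.standard_normal((4, 100)))  # None: buffer not yet full
label = clf.push(rng.standard_normal((4, 200)))
```

The window length trades latency against how much of a sustained state the classifier sees at once: a short window reacts quickly to transitions, while a longer one covers more of the ongoing activity within each state.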

Finally, the classification output stream was mapped to robot commands. Using ROS from MATLAB, a Neato mobile robot was instructed to move forward when the classifier predicted the eyes-closed state and to stop when it predicted the eyes-open state.
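The mapping itself is a small lookup from predicted state to velocity. The sketch below keeps it independent of ROS: the label encoding (0 for eyes open, 1 for eyes closed), the 0.1 m/s forward speed, and the `publish` callback are all assumptions. In a ROS setup, `publish` would typically wrap sending a `geometry_msgs/Twist` message on the robot's velocity topic (commonly named `cmd_vel`, also an assumption here).

```python
from dataclasses import dataclass

EYES_OPEN, EYES_CLOSED = 0, 1  # assumed label encoding

@dataclass(frozen=True)
class VelocityCommand:
    linear_x: float   # forward speed in m/s
    angular_z: float  # turn rate in rad/s

def command_for(label, forward_speed=0.1):
    """Drive straight ahead while eyes are closed; stop otherwise."""
    if label == EYES_CLOSED:
        return VelocityCommand(forward_speed, 0.0)
    return VelocityCommand(0.0, 0.0)

def run(labels, publish, forward_speed=0.1):
    """Convert each prediction into a command and hand it to `publish`.

    `publish` is a hypothetical callback, e.g. a wrapper around a ROS
    publisher; it is called once per prediction.
    """
    for label in labels:
        publish(command_for(label, forward_speed))

# Usage: collect commands in a list instead of sending them to a robot.
sent = []
run([EYES_OPEN, EYES_CLOSED, EYES_CLOSED, EYES_OPEN], sent.append)
```

Treating every non-"eyes closed" prediction as "stop" is the conservative choice: any unexpected label leaves the robot stationary rather than moving.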